
Perhaps the most common misconception about textures is that textures are pictures: images of skin, rock, or something else that you can look at in an image editor. While it is true that many textures are pictures of something, it would be wrong to think of textures as nothing more than pictures. Sadly, this way of thinking about textures is reinforced by OpenGL; data in textures are “colors,” and many functions dealing with textures have the word “image” somewhere in them.

The best way to avoid this kind of thinking is to make our first textures ones that are not pictures at all. So, as our introduction to the world of textures, let us define a problem that textures can solve without being pictures.

We have seen that the Gaussian specular function is a pretty useful specular function. Its shininess value has a nice range, (0, 1], and it produces pretty good results visually. It has fewer artifacts than the less complicated Blinn-Phong function. But there is one significant problem: Gaussian is much more expensive to compute. Blinn-Phong requires a single power function; Gaussian requires not only exponentiation but also an inverse cosine. This is in addition to other operations, like squaring the exponent.

Let us say that we have determined that the Gaussian specular function is good but too expensive for our needs.[7] So we want a way to get the quality of Gaussian specular with better performance. What are our options?

A common tactic in optimizing math functions is a look-up table. These are arrays of some dimensionality that represent a function. For any function F(x), where x is valid over some range [a, b], you can define a table that stores the results of the function at various points along the valid range of x. Obviously, if x has an infinite range, there is a problem. But if x has a finite range, one can pick some number of values on that range and store the function's results for them in a table.

The obvious downside of this approach is that the quality you get depends on how large this table is: that is, on how many times the function is evaluated and stored in the table.

The Gaussian specular function takes three parameters: the surface normal, the half-angle vector, and the specular shininess of the surface. However, if we redefine the specular function in terms of the dot-product of the surface normal and the half-angle vector, we can reduce the number of parameters to two. Also, the specular shininess is a constant value across a mesh. So, for any given mesh, the specular function is a function of one parameter: the dot-product between the half-angle vector and the surface normal.
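Written out, with c standing for the dot product of the surface normal and the half-angle vector and m for the shininess (symbol names chosen here for illustration), the Gaussian term that BuildGaussianData evaluates later in this tutorial is:

```latex
G(c) = \exp\left(-\left(\frac{\arccos c}{m}\right)^{2}\right), \qquad c = \hat{n}\cdot\hat{h}
```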

So how do we get a look-up table to the shader? We could use the obvious method: build a uniform buffer containing an array of floats. We would multiply the dot-product by the number of entries in the table and pick a table entry based on that value. By now, you should be able to code this.
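A minimal CPU-side sketch of that look-up follows, assuming the table stores the Gaussian term at evenly spaced cosine values on [0, 1]. The names GaussianTerm, BuildTable, and LookUp are hypothetical. This version clamps the dot product to [0, 1], scales it by one less than the number of entries, and rounds, so that a dot product of exactly 1 selects the last entry.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <vector>

// Hypothetical helper: Gaussian specular term for cos(angle) = c and shininess m.
float GaussianTerm(float c, float m)
{
    float exponent = std::acos(c) / m;
    return std::exp(-(exponent * exponent));
}

// Hypothetical: sample the function at `entries` evenly spaced cosines on [0, 1].
std::vector<float> BuildTable(int entries, float shininess)
{
    assert(entries >= 2);
    std::vector<float> table(entries);
    for (int i = 0; i < entries; ++i)
        table[i] = GaussianTerm(i / static_cast<float>(entries - 1), shininess);
    return table;
}

// Hypothetical nearest-entry look-up; the input is clamped to the table's range.
float LookUp(const std::vector<float> &table, float cosAngle)
{
    cosAngle = std::min(std::max(cosAngle, 0.0f), 1.0f);
    float scaled = cosAngle * static_cast<float>(table.size() - 1);
    int index = static_cast<int>(std::lround(scaled));
    return table[index];
}
```

Comparing LookUp against GaussianTerm over the whole range shows the worst-case error shrinking as the table grows, which is the size-versus-quality trade-off described above.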

But let's say that we want an alternative; what else can we do? We can put our look-up table in a texture.

A texture is an object that contains one or more arrays of data, with all of the arrays having some dimensionality. The storage for a texture is owned by OpenGL and the GPU, much like they own the storage for buffer objects. Textures can be accessed in a shader, which fetches data from the texture at a specific location within the texture's arrays.

The arrays within a texture are called images; this is a legacy term, but it is what they are called. Textures have a texture type; this defines characteristics of the texture as a whole, like the number of dimensions of the images and a few other special things.

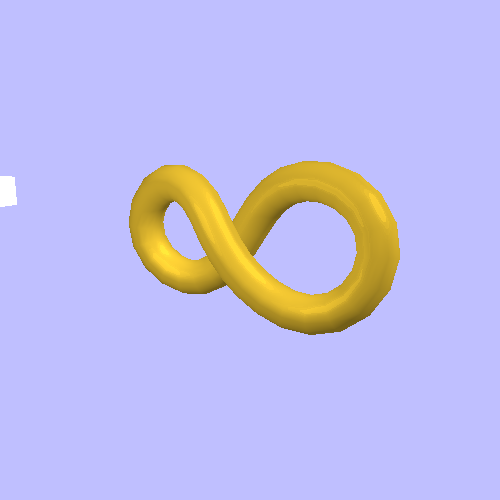

Our first use of textures is in the Basic Texture tutorial. This tutorial shows a scene containing a golden infinity symbol, with a directional light and a second moving point light source.

The camera and the object can be rotated using the left and right mouse buttons respectively. Pressing the Spacebar toggles between shader-based Gaussian specular and texture-based specular. The 1 through 4 keys switch to progressively larger textures, so that you can see the effect that higher-resolution look-up tables have on the visual result.

In order to understand how textures work, let's follow the data from our initial generation of the lookup tables to how the GLSL shader accesses them. The function BuildGaussianData generates the data that we want to put into our OpenGL texture.

Example 14.1. BuildGaussianData function

    void BuildGaussianData(std::vector<GLubyte> &textureData,
                           int cosAngleResolution)
    {
        textureData.resize(cosAngleResolution);
        std::vector<GLubyte>::iterator currIt = textureData.begin();
        for(int iCosAng = 0; iCosAng < cosAngleResolution; iCosAng++)
        {
            // Map the table index onto a cosine value on [0, 1].
            float cosAng = iCosAng / (float)(cosAngleResolution - 1);
            float angle = acosf(cosAng);
            // Gaussian term exp(-(angle / shininess)^2); g_specularShininess
            // is a global shininess value declared outside this excerpt.
            float exponent = angle / g_specularShininess;
            exponent = -(exponent * exponent);
            float gaussianTerm = glm::exp(exponent);
            // Store as an 8-bit normalized integer (the cast truncates).
            *currIt++ = (GLubyte)(gaussianTerm * 255.0f);
        }
    }

This function fills a std::vector with bytes that represent our lookup table. It's a pretty simple function. The parameter cosAngleResolution specifies the number of entries in the table. As we iterate over the table entries, we convert each index into a cosine value on the range [0, 1] and then perform the Gaussian specular computations.

However, the result of this computation is a float, not a GLubyte. Yet our array contains bytes. It is here that we must introduce a new concept widely used with textures: normalized integers.

A normalized integer is a way of storing floating-point values on the range [0, 1] in far fewer than the 32 bits it takes for a regular float. The idea is to take the full range of the integer and map it to the [0, 1] range. The full range of an 8-bit unsigned integer is [0, 255]. So to map it to a floating-point range of [0, 1], we simply divide the value by 255.

The above code converts gaussianTerm into a normalized integer by multiplying it by 255 and casting the result to GLubyte.
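To make the mapping concrete, here is a minimal sketch of 8-bit unsigned normalization in both directions. ToUnorm8 and FromUnorm8 are hypothetical names. Decoding divides by 255 as described above; encoding here rounds to the nearest integer, whereas Example 14.1 truncates with a plain cast, which biases stored values slightly downward.

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>

// Hypothetical: encode a float on [0, 1] as an 8-bit unsigned normalized integer.
std::uint8_t ToUnorm8(float value)
{
    assert(value >= 0.0f && value <= 1.0f);
    return static_cast<std::uint8_t>(std::lround(value * 255.0f));
}

// Hypothetical: decode by mapping the integer range [0, 255] back onto [0, 1].
float FromUnorm8(std::uint8_t stored)
{
    return stored / 255.0f;
}
```

A round trip through these two functions can lose at most half a step, that is, 0.5/255 of the original value's range.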

This saves a lot of memory. By using normalized integers in our texture, we use a quarter of the memory that a 32-bit floating-point texture would need. When it comes to textures, saving memory often improves performance. And since this is supposed to be a performance optimization over shader computations, it makes sense to use normalized integers.
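As a rough illustration of the 4x figure, this sketch computes the size of the texel data for a one-entry-per-texel 1D table, assuming 1 byte per texel for GL_R8 and 4 bytes for GL_R32F. The constant and function names are hypothetical, and the driver is free to lay the data out differently, so these are sizes of the texel data itself rather than a guarantee of GPU memory usage.

```cpp
#include <cstddef>

// Hypothetical per-texel sizes for two single-channel formats.
constexpr std::size_t kBytesPerTexelR8 = 1;   // GL_R8: one 8-bit unsigned normalized component
constexpr std::size_t kBytesPerTexelR32F = 4; // GL_R32F: one 32-bit float component

// Hypothetical: size of the texel data for a 1D table with `width` entries.
constexpr std::size_t TableBytes(std::size_t width, std::size_t bytesPerTexel)
{
    return width * bytesPerTexel;
}

// The 512-texel table used by this tutorial's highest resolution.
static_assert(TableBytes(512, kBytesPerTexelR8) == 512, "R8: 1 byte per entry");
static_assert(TableBytes(512, kBytesPerTexelR32F) == 2048, "R32F: 4 bytes per entry");
```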

The function CreateGaussianTexture calls BuildGaussianData to generate the array of normalized integers. The rest of that function uses the array to build the OpenGL texture object:

Example 14.2. CreateGaussianTexture function

    GLuint CreateGaussianTexture(int cosAngleResolution)
    {
        std::vector<GLubyte> textureData;
        BuildGaussianData(textureData, cosAngleResolution);

        GLuint gaussTexture;
        glGenTextures(1, &gaussTexture);
        glBindTexture(GL_TEXTURE_1D, gaussTexture);
        // Internal format GL_R8; the source array holds one unsigned byte per texel.
        glTexImage1D(GL_TEXTURE_1D, 0, GL_R8, cosAngleResolution, 0,
                     GL_RED, GL_UNSIGNED_BYTE, &textureData[0]);
        // Texture parameters; these are explained in the next tutorial.
        glTexParameteri(GL_TEXTURE_1D, GL_TEXTURE_BASE_LEVEL, 0);
        glTexParameteri(GL_TEXTURE_1D, GL_TEXTURE_MAX_LEVEL, 0);
        glBindTexture(GL_TEXTURE_1D, 0);

        return gaussTexture;
    }

The glGenTextures function creates a single texture object, similar to other glGen* functions we have seen. glBindTexture attaches the texture object to the context. The first parameter specifies the texture's type. Note that once you have bound a texture to the context with a certain type, it must always be bound with that same type. GL_TEXTURE_1D means that the texture contains one-dimensional images.

The next function, glTexImage1D, is how we allocate storage for the texture and pass data to it. It is similar to glBufferData, though it has many more parameters. The first parameter specifies the type of the currently bound texture. As with buffer objects, multiple textures can be bound to different texture type locations. So you could have a texture bound to GL_TEXTURE_1D and another bound to GL_TEXTURE_2D. But it's really bad form to try to exploit this. It is best to just have one target bound at a time.

The second parameter is something we will talk about in the next tutorial. The third parameter is the format that OpenGL will use to store the texture's data. The fourth parameter is the width of the image, which corresponds to the length of our lookup table. The fifth parameter must always be 0; it represents an old feature no longer supported.

The last three parameters of all functions of the form glTexImage* are special. They tell OpenGL how to read the texture data in our array. This seems redundant, since we already told OpenGL what the format of the data was with the third parameter. This bears further examination.

Textures and buffer objects have many similarities. They both represent memory owned by OpenGL. The user can modify this memory with various functions. Besides the fact that a texture object can contain multiple images, the major difference is the arrangement of data as it is stored by the GPU.

Buffer objects are linear arrays of memory. The data stored by OpenGL must be binary-identical to the data that the user specifies with glBuffer(Sub)Data calls. The format of the data stored in a buffer object is defined externally to the buffer object itself. Buffer objects used for vertex attributes have their formats defined by glVertexAttribPointer. The format for buffer objects that store uniform data is defined by the arrangement of types in a GLSL uniform block.

There are other ways of using buffer objects in which OpenGL calls fill them with data. But in all cases, the binary format of the data to be stored is very strictly controlled by the user. It is the user's responsibility to make sure that the data stored there uses the format that OpenGL was told to expect, even when OpenGL itself is generating that data.

Textures do not work this way. The format of an image stored in a texture is controlled by OpenGL itself. The user tells it what format to use, but the specific arrangement of bytes is up to OpenGL. This allows different hardware to store textures in whatever way is best for accessing them.

Because of this, there is an intermediary between the data the user provides and the data that is actually stored in the texture. The data the user provides must be transformed into the format that OpenGL uses internally for the texture's data. Therefore, glTexImage* functions must specify both the expected internal format and a description of how the texture data is stored in the user's array.

Pixel Transfer and Formats. This process, the conversion between an image's internal format and a user-provided array, is called a pixel transfer operation. These are somewhat complex, but not too difficult to understand.

Each pixel in a texture is more properly referred to as a texel. Since texture data is accessed in OpenGL by the texel, we want each of our normalized unsigned integers to be stored in a single texel. So our input data has only one value per texel; that value is 8 bits in size, and it represents a normalized unsigned integer.

The last three parameters describe this to OpenGL. The parameter GL_RED says that we are uploading a single component to the texture, namely the red component. Components of texels are named after color components. Because this parameter does not end in “_INTEGER”, OpenGL knows that the data we are uploading is either a floating-point value or a normalized integer value (which converts to a float when accessed by the shader).

The parameter GL_UNSIGNED_BYTE says that each component that we are uploading is stored in an 8-bit unsigned byte. This, plus the pointer to the data, is all OpenGL needs to read our data.

That describes the data format as we are providing it. The format parameter, the third parameter to the glTexImage* functions, describes the format of the texture's internal storage. This is how OpenGL itself will store the texel data; it does not have to exactly match the format provided. The texture's format defines the properties of the texels stored in that texture:

- The components stored in the texel. Multiple components can be used, but only certain combinations of components are allowed. The components include the RGBA of colors, and certain more exotic values we will discuss later.
- The number of bits that each component takes up when stored by OpenGL. Different components within a texel can have different bit depths.
- The data type of the components. Certain exotic formats can give different components different types, but most give every component the same data type. Data types include normalized unsigned integers, floats, non-normalized signed integers, and so forth.

The parameter GL_R8 defines all of these. The “R” represents the components that are stored, namely the “red” component. Since textures used to always represent image data, the components are named after components of a color vec4. Each component takes up “8” bits. The suffix of the format represents the data type. Since unsigned normalized values are so common, they get the “no suffix” suffix; all other data types have a specific suffix. Float formats use “F”; a red, 32-bit float internal format would use GL_R32F.

Note that this perfectly matches the texture data that we generated. We tell OpenGL to make the texture store unsigned normalized 8-bit integers, and we provide unsigned normalized 8-bit integers as the input data.

This is not strictly necessary. We could have used GL_R16 as our format instead. OpenGL would have created a texture that contained 16-bit unsigned normalized integers. OpenGL would then have had to convert our 8-bit input data to the 16-bit format. It is good practice for the sake of performance to try to match the texture's format with the format of the data that you upload to OpenGL, so as to avoid conversion.
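Conceptually, that conversion maps each 8-bit value b to the 16-bit value that represents the same number on [0, 1]. Because 65535 = 257 × 255, that value is exactly 257b. This is a sketch of the arithmetic under that assumption, not a description of how any particular driver performs the conversion; Unorm8ToUnorm16 is a hypothetical name.

```cpp
#include <cstdint>

// Hypothetical: convert an 8-bit unsigned normalized value to the 16-bit value
// that represents the same number on [0, 1], since b / 255 == (257 * b) / 65535.
constexpr std::uint16_t Unorm8ToUnorm16(std::uint8_t b)
{
    return static_cast<std::uint16_t>(b * 257u);
}
```

The endpoints map to the endpoints (0 to 0, 255 to 65535), so the converted texture still spans the full [0, 1] range.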

The calls to glTexParameter set parameters on the texture object. These parameters define certain properties of the texture. Exactly what these parameters are doing is something that will be discussed in the next tutorial.

OK, so we have a texture object, which has a texture type. We need some way to represent that texture in GLSL. This is done with something called a GLSL sampler. Samplers are special types in GLSL; they represent a texture that has been bound to the OpenGL context. For every OpenGL texture type, there is a corresponding sampler type. So a texture that is of type GL_TEXTURE_1D is paired with a sampler of type sampler1D.

The GLSL sampler type is very unusual. Indeed, it is probably best if you do not think of it like a normal basic type. Think of it instead as a specific hook into the shader that the user can use to supply a texture. The restrictions on variables of sampler types are:

- Samplers can only be declared at global scope as uniform, or in function parameter lists with the in qualifier. They cannot even be declared as local variables.
- Samplers cannot be members of uniform blocks. A struct can contain a sampler, but only if that struct is itself declared as a plain uniform variable.
- Samplers can be used in arrays, but the index for sampler arrays must be a compile-time constant.
- Samplers do not have values. No mathematical expressions can use sampler variables.
- The only use of variables of sampler type is as parameters to functions. User-defined functions can take them as parameters, and there are a number of built-in functions that take samplers.

In the shader TextureGaussian.frag, we have an example of creating a sampler:

    uniform sampler1D gaussianTexture;

This creates a sampler for a 1D texture type; the user cannot use any other type of texture with this sampler.

Texture Sampling. The process of fetching data from a texture, at a particular location, is called sampling. This is done in the shader as part of the lighting computation:

Example 14.3. Shader Texture Access

    vec3 halfAngle = normalize(lightDir + viewDirection);
    float texCoord = dot(halfAngle, surfaceNormal);
    float gaussianTerm = texture(gaussianTexture, texCoord).r;
    gaussianTerm = cosAngIncidence != 0.0 ? gaussianTerm : 0.0;

The third line is where the texture is accessed. The function texture accesses the texture denoted by the first parameter (the sampler to fetch from). It accesses the value of the texture from the location specified by the second parameter. This second parameter, the location to fetch from, is called the texture coordinate. Since our texture has only one dimension, our texture coordinate also has one dimension.

The texture function for 1D textures expects the texture coordinate to be normalized. This means something similar to normalizing integer values: a normalized texture coordinate maps values on the range [0, 1] to texel coordinates (the coordinates of the texels within the texture) on the range [0, texture-size].

What this means is that our texture coordinates do not have to care how big the texture is. We can change the texture's size without changing anything about how we compute the texture coordinate. A coordinate of 0.5 will always mean the middle of the texture, regardless of the size of that texture.
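A minimal sketch of that mapping, assuming nearest-texel lookup and a coordinate already on [0, 1): scaling by the texture size gives a texel-space coordinate, and texel i covers the normalized interval [i/N, (i+1)/N). TexelIndex is a hypothetical name.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical: map a normalized coordinate u in [0, 1) onto a texture `size`
// texels wide and return the texel that contains it (nearest-texel lookup).
int TexelIndex(float u, int size)
{
    assert(size > 0 && u >= 0.0f && u < 1.0f);
    float texelSpace = u * size; // [0, 1) scaled to [0, size)
    return static_cast<int>(std::floor(texelSpace));
}
```

The same coordinate, 0.5, selects texel 32 of a 64-texel table and texel 256 of a 512-texel table; in both cases it lands at the texture's midpoint, and only the texel-space value changes with size.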

Texture coordinate values outside of the [0, 1] range must still map to a location on the texture. What happens to such coordinates depends on values set in OpenGL that we will see later.

The return value of the texture function is a vec4, regardless of the image format of the texture. So even though our texture's format is GL_R8, meaning that it holds only one channel of data, we still get four in the shader. The other three components are 0, 0, and 1, respectively.

We get floating-point data back because our sampler is a floating-point sampler. Samplers use the same prefixes as vec types. An ivec4 represents a vector of 4 integers, while a vec4 represents a vector of 4 floats. Thus, an isampler1D represents a texture that returns integers, while a sampler1D is a texture that returns floats. Recall that 8-bit normalized unsigned integers are just a cheap way to store floats, so this matches everything correctly.

We have a texture object, an OpenGL object that holds our image data with a specific format. We have a shader that contains a sampler uniform that represents a texture being accessed by our shader. How do we associate a texture object with a sampler in the shader?

Although the API is slightly more obfuscated due to legacy issues, this association is made essentially the same way as with uniform buffer objects.

The OpenGL context has an array of slots called texture image units, also known as image units or texture units. Each image unit represents a single texture. A sampler uniform in a shader is set to a particular image unit; this sets the association between the shader and the image unit. To associate an image unit with a texture object, we bind the texture to that unit.

Though the idea is essentially the same, there are many API differences between the UBO mechanism and the texture mechanism. We will start with setting the sampler uniform to an image unit.

With UBOs, this used a different API from regular uniforms. Because samplers are actual uniforms, the sampler API is just the uniform API:

    GLint gaussianTextureUnif = glGetUniformLocation(data.theProgram, "gaussianTexture");
    glUseProgram(data.theProgram);
    glUniform1i(gaussianTextureUnif, g_gaussTexUnit);

Sampler uniforms are considered one-dimensional (scalar) integer values from the OpenGL side of the API. Do not forget that, on the GLSL side, samplers have no value at all.

When it comes time to bind the texture object to that image unit, OpenGL again overloads existing API rather than making a new one the way UBOs did:

    glActiveTexture(GL_TEXTURE0 + g_gaussTexUnit);
    glBindTexture(GL_TEXTURE_1D, g_gaussTextures[g_currTexture]);

The glActiveTexture function changes the current texture unit. All subsequent texture operations, whether glBindTexture, glTexImage, glTexParameter, etc., affect the texture bound to the current texture unit. To put it another way, with UBOs, it was possible to bind a buffer object to GL_UNIFORM_BUFFER without overwriting any of the uniform buffer binding points. This is possible because there are two functions for buffer object binding: glBindBuffer, which binds only to the target, and glBindBufferRange, which binds to the target and an indexed location.

Texture units do not have this. There is one binding function, glBindTexture. And it always binds to whatever texture unit happens to be current: namely, the one set by the last call to glActiveTexture.

What this means is that if you want to modify a texture, you must overwrite a texture unit that may already be bound. This is usually not a huge problem, because you rarely modify textures in the same area of code used to render. But you should be aware of this API oddity.

Also note the peculiar glActiveTexture syntax for specifying the image unit: GL_TEXTURE0 + g_gaussTexUnit. This is the correct way to specify which texture unit, because glActiveTexture is defined in terms of an enumerator rather than integer texture image units.
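The following toy model is not OpenGL. It is a hypothetical C++ class that mimics only the binding rules described above: a current-unit selector that takes an enumerator offset from a base value, and a bind call that always writes into whichever unit is current. The base value 0x84C0 matches the numeric value of GL_TEXTURE0; the class, its methods, and the unit count of 16 are made up for illustration (a real program queries its limit with glGetIntegerv(GL_MAX_COMBINED_TEXTURE_IMAGE_UNITS, ...)).

```cpp
#include <array>
#include <cassert>
#include <cstdint>

// Toy model of texture-unit state; not a real OpenGL API.
class ToyTextureUnits
{
public:
    static constexpr std::uint32_t kTexture0 = 0x84C0; // same value as GL_TEXTURE0
    static constexpr int kUnitCount = 16;              // hypothetical unit count

    // Like glActiveTexture: takes an enumerator, not a plain unit index.
    void ActiveTexture(std::uint32_t unitEnum)
    {
        assert(unitEnum >= kTexture0 && unitEnum < kTexture0 + kUnitCount);
        m_current = static_cast<int>(unitEnum - kTexture0);
    }

    // Like glBindTexture: always overwrites the binding of the current unit.
    void BindTexture(std::uint32_t texture) { m_bound[m_current] = texture; }

    std::uint32_t BoundTo(int unit) const { return m_bound.at(unit); }

private:
    int m_current = 0;
    std::array<std::uint32_t, kUnitCount> m_bound{}; // 0 means "nothing bound"
};
```

Binding a second texture in order to modify it, without first switching units, replaces whatever the current unit held, which is the API oddity described above.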

If you look at the rendering function, you will find that the texture will always be bound, even when not rendering with the texture. This is perfectly harmless; the contents of a texture image unit are ignored unless a program has a sampler uniform that is associated with that image unit.

With the association between a texture and a program's sampler uniform made, there is still one thing we need before we render. There are a number of parameters the user can set that affect how texture data is fetched from the texture.

In our case, we want to make sure that the shader cannot access texels outside of the range of the texture. If the shader tries, we want the shader to get the nearest texel to our value. So if the shader passes a texture coordinate of -0.3, we want it to get the same texel as if it had passed 0.0. In short, we want to clamp the texture coordinate to the range of the texture.

These kinds of settings are controlled by an OpenGL object called a sampler object. The code that creates a sampler object for our textures is in the CreateGaussianTextures function.

Example 14.4. Sampler Object Creation

    glGenSamplers(1, &g_gaussSampler);
    glSamplerParameteri(g_gaussSampler, GL_TEXTURE_MAG_FILTER, GL_NEAREST);
    glSamplerParameteri(g_gaussSampler, GL_TEXTURE_MIN_FILTER, GL_NEAREST);
    glSamplerParameteri(g_gaussSampler, GL_TEXTURE_WRAP_S, GL_CLAMP_TO_EDGE);

As with most OpenGL objects, we create a sampler object with glGenSamplers. However, notice something unusual with the next series of functions. We do not bind a sampler to the context to set parameters in it, nor does glSamplerParameter take a context target. We simply pass an object directly to the function.

In the above code, we set three parameters. The first two parameters are things we will discuss in the next tutorial. The third parameter, GL_TEXTURE_WRAP_S, is how we tell OpenGL that texture coordinates should be clamped to the range of the texture.

OpenGL names the components of the texture coordinate “strq” rather than “xyzw” or “uvw” as is common. Indeed, OpenGL has two different names for the components: “strq” is used in the main API, but “stpq” is used in GLSL shaders. Much like “rgba”, you can use “stpq” as swizzle selectors for any vector instead of the traditional “xyzw”.

Note

The reason for the odd naming is that OpenGL tries to keep vector suffixes from conflicting. “uvw” does not work because “w” is already part of the “xyzw” suffix. In GLSL, the “r” in “strq” conflicts with “rgba”, so they had to go with “stpq” instead.

The GL_TEXTURE_WRAP_S parameter defines how the “s” component of the texture coordinate will be adjusted if it falls outside of the [0, 1] range. Setting this to GL_CLAMP_TO_EDGE clamps this component of the texture coordinate to the edge of the texture. Each component of the texture coordinate can have a separate wrapping mode. Since our texture is a 1D texture, its texture coordinates only have one component.
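For the nearest-texel filtering used here, the effect of GL_CLAMP_TO_EDGE can be sketched as clamping the computed texel index to [0, N - 1], so a coordinate of -0.3 selects the same texel as 0.0. This is a simplification under that assumption: the specification phrases the rule as clamping the coordinate itself to [1/(2N), 1 - 1/(2N)], which with nearest filtering picks the same edge texels. ClampToEdgeIndex is a hypothetical name.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// Hypothetical: nearest-texel lookup with clamp-to-edge wrapping on a 1D
// texture of `size` texels. Assumes s is finite and of moderate magnitude.
int ClampToEdgeIndex(float s, int size)
{
    assert(size > 0);
    int index = static_cast<int>(std::floor(s * size));
    return std::min(std::max(index, 0), size - 1);
}
```

Coordinates of 1.0 and above all land on the last texel, and negative coordinates all land on the first, exactly the clamping behavior the tutorial asks for.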

The sampler object is used similarly to how textures are associated with GLSL samplers: we bind them to a texture image unit. The API is much simpler than what we saw for textures:

    glBindSampler(g_gaussTexUnit, g_gaussSampler);

We pass the texture unit directly; there is no need to add GL_TEXTURE0 to it to convert it into an enumerator. In effect, each texture unit now holds a sampler object in addition to a texture.

Note

Technically, we do not have to use a sampler object. The parameters we use for samplers could have been set into the texture object directly with glTexParameter. Sampler objects have a lot of advantages over setting the value in the texture, and binding a sampler object overrides parameters set in the texture. There are still some parameters that must be in the texture object, and those are not overridden by the sampler object.

This tutorial creates multiple textures at a variety of resolutions. The resolution corresponding to the 1 key is the lowest, while the one corresponding to the 4 key is the highest.

If we use resolution 1, we can see that it is a pretty rough approximation. We can very clearly see the distinction between the different texels in our lookup table. It is a 64-texel lookup table.

Switching to the level 3 resolution shows more gradations, and looks much more like the shader calculation. This one is 256 texels across.

The largest resolution, 4, is 512 texels, and it looks nearly identical to the pure shader version for this object.

[7] This is for demonstration purposes only. You should not undertake this process in the real world unless you have determined with proper profiling that the specular function is a performance problem that you should work to alleviate.