Lighting in these tutorials has ultimately been a form of deception. An increasingly accurate one, but it is deception all the same. We are not rendering round objects; we simply use lighting and interpolation of surface characteristics to make an object appear round. Sometimes we have artifacts or optical illusions that show the lie for what it is. Even when the lie is near-perfect, the geometry of a model still does not correspond to what the lighting makes the geometry appear to be.

In this tutorial, we will be looking at the ultimate expression of this lie. We will use lighting computations to make an object appear to be something entirely different from its geometry.

We want to render a sphere. We could do this as we have done in previous tutorials. That is, generate a mesh of a sphere and render it. But this will never be a mathematically perfect sphere. It is easy to generate a sphere with an arbitrary number of triangles, and thus improve the approximation. But it will always be an approximation.

Spheres are very simple, mathematically speaking. They are simply the set of points in a space that are a certain distance from a specific point. This sounds like something we might be able to compute in a shader.

Our first attempt to render a sphere will be quite simple. We will use the vertex shader to compute the vertex positions of a square in clip-space. This square will be in the same position and width/height as the actual circle would be, and it will always face the camera. In the fragment shader, we will compute the position and normal of each point along the sphere's surface. By doing this, we can map each point on the square to a point on the sphere we are trying to render. This square is commonly called a flat card or billboard.

For those points on the square that do not map to a sphere point (i.e., the corners), we have to do something special. Fragment shaders are required to write a value to the output image. But they also have the ability to abort processing and write neither color nor depth information to the color and depth buffers. We will employ this to draw our square-spheres.

This technique is commonly called impostors. The idea is that we're actually drawing a square, but we use the fragment shaders to make it look like something else. The geometric shape is just a placeholder, a way to invoke the fragment shader over a certain region of the screen. The fragment shader is where the real magic happens.

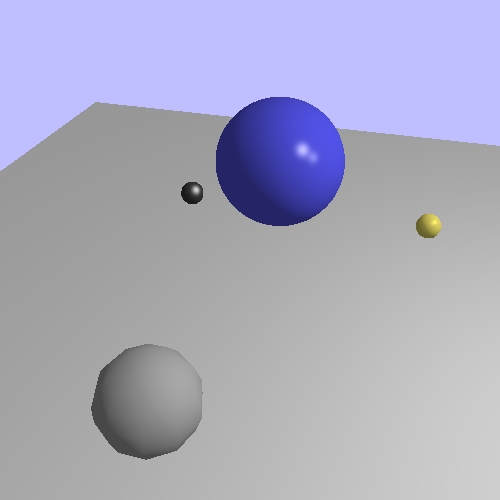

The tutorial project Basic Impostor demonstrates this technique. It shows a scene with several spheres, a directional light, and a moving point light source.

The camera movement is controlled in the same way as previous tutorials. The T key will toggle a display showing the look-at point. The - and = keys will rewind and fast-forward the time, and the P key will toggle pausing of the time advancement.

The tutorial starts showing mesh spheres, to allow you to switch back and forth between actual meshes and impostor spheres. Each sphere is independently controlled:

Table 13.1. Sphere Impostor Control Key Map

| Key | Sphere |
|---|---|
| 1 | The central blue sphere. |
| 2 | The orbiting grey sphere. |
| 3 | The black marble on the left. |
| 4 | The gold sphere on the right. |

This tutorial uses a rendering setup similar to the last one. The shaders use uniform blocks to control most of the uniforms. There is a shared global lighting uniform block, as well as one for the projection matrix.

The way this program actually renders the geometry for the impostors is interesting. The vertex shader looks like this:

Example 13.1. Basic Impostor Vertex Shader

    #version 330

    layout(std140) uniform;

    out vec2 mapping;

    uniform Projection
    {
        mat4 cameraToClipMatrix;
    };

    uniform float sphereRadius;
    uniform vec3 cameraSpherePos;

    void main()
    {
        vec2 offset;
        switch(gl_VertexID)
        {
        case 0:
            //Bottom-left
            mapping = vec2(-1.0, -1.0);
            offset = vec2(-sphereRadius, -sphereRadius);
            break;
        case 1:
            //Top-left
            mapping = vec2(-1.0, 1.0);
            offset = vec2(-sphereRadius, sphereRadius);
            break;
        case 2:
            //Bottom-right
            mapping = vec2(1.0, -1.0);
            offset = vec2(sphereRadius, -sphereRadius);
            break;
        case 3:
            //Top-right
            mapping = vec2(1.0, 1.0);
            offset = vec2(sphereRadius, sphereRadius);
            break;
        }

        vec4 cameraCornerPos = vec4(cameraSpherePos, 1.0);
        cameraCornerPos.xy += offset;

        gl_Position = cameraToClipMatrix * cameraCornerPos;
    }

Notice anything missing? There are no input variables declared anywhere in this vertex shader.

It does still use an input variable: gl_VertexID. This is a built-in input variable; it contains the current index of this particular vertex. When using array rendering, it's just the count of the vertex we are in. When using indexed rendering, it is the index of this vertex.

When we render this mesh, we render 4 vertices as a GL_TRIANGLE_STRIP. This is rendered in array rendering mode, so the gl_VertexID will vary from 0 to 3. Our switch/case statement determines which vertex we are rendering. Since we're trying to render a square with a triangle strip, the order of the vertices needs to be appropriate for this.
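To make the ordering requirement concrete, here is a minimal CPU-side sketch in C++. It is not part of the tutorial's code; `Vec2`, `cornerMapping`, and `signedArea2` are hypothetical helpers introduced only for illustration. It mirrors the switch statement above and lets us check the two triangles that a 4-vertex strip expands into, namely vertices (0, 1, 2) and (2, 1, 3).

```cpp
struct Vec2 { float x, y; };

// Mirrors the shader's switch: vertex index -> corner of the mapping square.
Vec2 cornerMapping(int vertexID)
{
    switch (vertexID)
    {
    case 0:  return {-1.0f, -1.0f}; // Bottom-left
    case 1:  return {-1.0f,  1.0f}; // Top-left
    case 2:  return { 1.0f, -1.0f}; // Bottom-right
    default: return { 1.0f,  1.0f}; // Top-right (index 3)
    }
}

// Twice the signed area of triangle (a, b, c).
// The sign encodes the winding: negative means clockwise.
float signedArea2(Vec2 a, Vec2 b, Vec2 c)
{
    return (b.x - a.x) * (c.y - a.y) - (c.x - a.x) * (b.y - a.y);
}
```

With this ordering, both strip triangles have the same winding, and each covers half of the 2x2 mapping square. Swapping, say, vertices 2 and 3 would make the two triangles overlap and leave part of the square uncovered.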

After computing which vertex we are trying to render, we use the radius-based offset as a bias to the camera-space sphere position. The Z value of the sphere position is left alone, since it will always be correct for our square. After that, we transform the camera-space position to clip-space as normal.
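The offset step can be sketched on the CPU as follows. This is an illustrative C++ analogue, not the tutorial's code; `Vec3` and `cameraCorner` are hypothetical names, and the bit tests are simply a compact stand-in for the shader's switch statement.

```cpp
struct Vec3 { float x, y, z; };

// Camera-space corner of the impostor square for a given vertex index.
// Only X and Y are biased by the radius; Z keeps the sphere center's depth.
Vec3 cameraCorner(Vec3 cameraSpherePos, float sphereRadius, int vertexID)
{
    // Bit 1 of the index selects left/right, bit 0 selects bottom/top,
    // which matches the corner order used by the vertex shader.
    float signX = (vertexID & 2) ? 1.0f : -1.0f;
    float signY = (vertexID & 1) ? 1.0f : -1.0f;

    return { cameraSpherePos.x + signX * sphereRadius,
             cameraSpherePos.y + signY * sphereRadius,
             cameraSpherePos.z };
}
```

All four corners share the same camera-space Z, so the square is parallel to the camera's XY plane and passes through the sphere's center.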

The output mapping is a value that is used by the fragment shader, as we will see below.

Since this vertex shader takes no inputs, our vertex array object does not need to contain anything either. That is, we never call glEnableVertexAttribArray on the VAO. Since no attribute arrays are enabled, we also have no need for a buffer object to store vertex array data. So we never call glVertexAttribPointer. We simply generate an empty VAO with glGenVertexArrays and use it without modification.

Our lighting equations in the past needed only a position and normal in camera-space (as well as other material and lighting parameters) in order to work. So the job of the fragment shader is to provide them, even though they do not correspond to those of the actual triangles in any way.

Here are the salient new parts of the fragment shader for impostors:

Example 13.2. Basic Impostor Fragment Shader

    in vec2 mapping;

    void Impostor(out vec3 cameraPos, out vec3 cameraNormal)
    {
        float lensqr = dot(mapping, mapping);
        if(lensqr > 1.0)
            discard;

        cameraNormal = vec3(mapping, sqrt(1.0 - lensqr));
        cameraPos = (cameraNormal * sphereRadius) + cameraSpherePos;
    }

    void main()
    {
        vec3 cameraPos;
        vec3 cameraNormal;

        Impostor(cameraPos, cameraNormal);

        vec4 accumLighting = Mtl.diffuseColor * Lgt.ambientIntensity;
        for(int light = 0; light < numberOfLights; light++)
        {
            accumLighting += ComputeLighting(Lgt.lights[light],
                cameraPos, cameraNormal);
        }

        outputColor = sqrt(accumLighting); //2.0 gamma correction
    }

In order to compute the position and normal, we first need to find the point on the sphere that corresponds with the point on the square that we are currently on. And to do that, we need a way to tell where on the square we are.

Using gl_FragCoord will not help, as it is relative to the entire screen. We need a value that is relative only to the impostor square. That is the purpose of the mapping variable. When this variable is at (0, 0), we are in the center of the square, which is the center of the sphere. When it is at (-1, -1), we are at the bottom left corner of the square.

Given this, we can now compute the sphere point directly “above” the point on the square, which is the job of the Impostor function.

Before we can compute the sphere point however, we must make sure that we are actually on a point that has the sphere above it. This requires only a simple distance check. The half-width of the square is equal to the radius of the sphere, and mapping runs from -1 to 1 across the square. So if the distance of the mapping variable from its (0, 0) point is greater than 1, then we know that this point is off of the sphere.

Here, we use a clever way of computing the length: we do not compute it at all. Instead, we compute the square of the length. We know that if $X^2 > Y^2$ is true, then $X > Y$ must also be true for all positive real numbers X and Y. So we just do the comparison as squares, rather than taking a square-root to find the true length.
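A small C++ sketch of the two equivalent tests, for comparison. The function names are hypothetical and exist only for this illustration; the second function corresponds to what the shader does with dot(mapping, mapping).

```cpp
#include <cmath>

// Distance test done the slow way: take the square root, then compare.
bool outsideByLength(float x, float y)
{
    return std::sqrt(x * x + y * y) > 1.0f;
}

// The same test on the squared length, with no square root needed.
// Comparing against 1.0 works because 1.0 squared is still 1.0.
bool outsideBySquare(float x, float y)
{
    return (x * x + y * y) > 1.0f;
}
```

For a threshold other than 1, the squared form would have to compare against the square of that threshold instead.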

If the point is not under the sphere, we execute something new: discard. The discard keyword is unique to fragment shaders. It tells OpenGL that the fragment is invalid and its data should not be written to the image or depth buffers. This allows us to carve out a shape in our flat square, turning it into a circle.

The computation of the normal is based on simple trigonometry. The normal of a sphere does not change based on the sphere's radius. Therefore, we can compute the normal in the space of the mapping, which uses a normalized sphere radius of 1. The normal of a sphere at a point is in the same direction as the direction from the sphere's center to that point on the surface.

Let's look at the 2D case. To have a 2D vector direction, we need an X and Y coordinate. If we only have the X, but we know that the vector has a certain length L, then we can compute the Y component of the vector based on the Pythagorean theorem:

$$X^2 + Y^2 = L^2 \quad\Rightarrow\quad Y = \pm\sqrt{L^2 - X^2}$$

We simply use the 3D version of this. We have X and Y from mapping, and we know the length is 1.0. So we compute the Z value easily enough. And since we are only interested in the front-side of the sphere, we know that the Z value must be positive.

Computing the position is also easy. The position of a point on the surface of a sphere is the normal at that position scaled by the radius and offset by the center point of the sphere.
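Putting the pieces together, here is a minimal CPU-side analogue of the Impostor function in C++. It is a sketch for experimentation, not the tutorial's code: `Vec3` and `impostor` are hypothetical names, and a `false` return value stands in for the shader's discard.

```cpp
#include <cmath>

struct Vec3 { float x, y, z; };

// CPU analogue of the shader's Impostor function.
// Returns false where the shader would discard the fragment.
bool impostor(float mapX, float mapY,
              Vec3 cameraSpherePos, float sphereRadius,
              Vec3 &cameraPos, Vec3 &cameraNormal)
{
    float lensqr = mapX * mapX + mapY * mapY;
    if (lensqr > 1.0f)
        return false; // Off the sphere: nothing to shade.

    // Unit-length normal; the positive root selects the camera-facing side.
    cameraNormal = { mapX, mapY, std::sqrt(1.0f - lensqr) };

    // Surface point: normal scaled by the radius, offset by the center.
    cameraPos = { cameraNormal.x * sphereRadius + cameraSpherePos.x,
                  cameraNormal.y * sphereRadius + cameraSpherePos.y,
                  cameraNormal.z * sphereRadius + cameraSpherePos.z };
    return true;
}
```

At mapping (0, 0) this yields the normal (0, 0, 1) and the point on the sphere nearest the camera plane. Any point it produces lies exactly one radius away from the center, up to floating-point error.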

One final thing. Notice the square-root at the end, being applied to our accumulated lighting. This effectively simulates a gamma of 2.0, but without the expensive pow function call. A sqrt call is much less expensive and far more likely to be directly built into the shader hardware. Yes, this is not entirely accurate, since most displays simulate the 2.2 gamma of CRT displays. But it's a lot less inaccurate than applying no correction at all. We'll discuss a much cheaper way to apply proper gamma correction in future tutorials.
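To get a feel for how close the approximation is, the two encodings can be compared on the CPU. This is only an illustrative C++ sketch; the function names are hypothetical and the shader itself uses just the sqrt form.

```cpp
#include <cmath>

// Gamma 2.0 encoding, as used by the shader: a plain square root.
float encodeGamma20(float linearValue)
{
    return std::sqrt(linearValue);
}

// Gamma 2.2 encoding: closer to what displays expect, but needs pow.
float encodeGamma22(float linearValue)
{
    return std::pow(linearValue, 1.0f / 2.2f);
}
```

For a linear intensity of 0.5, the square root gives roughly 0.707 while the 2.2 curve gives roughly 0.730. Writing 0.5 with no correction at all is much further from either value.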